Ladle From the Feature Stream

Agility is not easily adopted. This of course has many reasons, many of them having to do with deeply rooted beliefs, habits, and policies. But there also seems to be misunderstanding at work.

The more agile or not so agile teams/organizations I see, the more I think there is a fundamental flaw in how they understand and use iterations.

Iterations are very prominent in what is probably the most prevalent agile process: Scrum. The whole software production effort revolves around Sprints. Software is developed Sprint after Sprint after Sprint.

Anatomy of a Sprint

Sprints are defined to have a particular structure: They begin with a Sprint Planning meeting where the Product Owner negotiates with the developers which User Stories to produce. They end with a Sprint Review meeting to show off what has been accomplished during the Sprint, i.e. to gather feedback from stakeholders. And finally the team reflects on how the Sprint worked out during the Sprint Retrospective.

The goal of each Sprint is to deliver valuable software at the end, to always produce code which can be released. That’s why progress is made in increments described in User Stories, each describing a feature of the software from the perspective of the user/customer or, more generally, a stakeholder.

Sprints violate the Single Responsibility Principle

Scrum’s idea of a Sprint sounds perfectly reasonable. Nevertheless it’s quite difficult to live by it for many organizations. Why’s that? My guess is, the reason lies in several different aspects of software production being tangled up in a Sprint. Sprints (or more generally: iterations) are overburdened with purpose. Sprints have been assigned too many responsibilities.

I believe the Single Responsibility Principle (SRP) is not only relevant for software structures but also for organizational structures. Developers should be developers only, Product Owners should be Product Owners only, managers should be managers only etc.

Unfortunately many managers try to also be Product Owners or ask developers to be Product Owners as well etc. This does not work out. It’s a recipe for much friction, if not for disaster.

The same holds true for Sprints. Sprints should have only one purpose. Which would be…? Here’s what I think Sprints are supposed to accomplish:

- Sprints are understood as the periods of software delivery. At the end of each Sprint software should be rolled out to users.

- Sprints are understood as periods of code production. Each Sprint the developers commit themselves to a batch of features to produce. Reliable production of those features is of high importance.

- Sprints are understood to help forecasting User Story production. Not only do Product Owners want to know how much developers will have accomplished at the end of a Sprint, also managers want to look further into the future. For that purpose the effort needed to implement each User Story is estimated at the beginning of each Sprint. Resulting total numbers are then compared to other Sprints and used to extrapolate progress.

- Sprints are understood as periods of feedback from stakeholders. Although the Product Owner could give feedback during the Sprint, the official feedback „event“ is at the end. Only if User Stories pass muster can they be considered really, really done.

- Sprints are understood as periods of fixed priorities. Scope and order of selected User Stories should not change. New User Stories can only be introduced in the next Sprint.

- Sprints are understood as periods on which the team should reflect. In order to constantly improve its abilities and performance the team needs to assess how it’s doing and deliberate about changes.

That’s quite a few purposes for Sprints, isn’t it? Maybe I’ve even forgotten a couple.

I think: Small wonder it’s so difficult to get Agility or Scrum right. So many goals to reach.

Simply put: Sprints are a tightly coupled set of responsibilities. That, to me, is in contradiction with fundamental principles of software development.

Decoupling Sprint responsibilities

In order to make the transition to Agility easier, all those responsibilities have to be disentangled. Each has to be addressed and implemented separately.

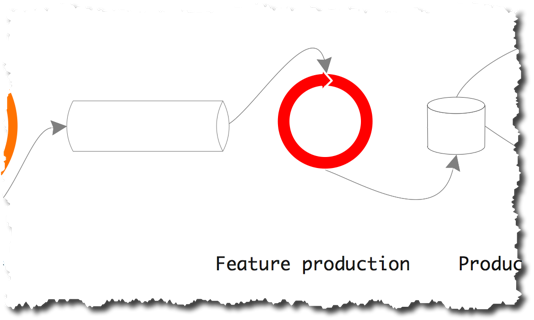

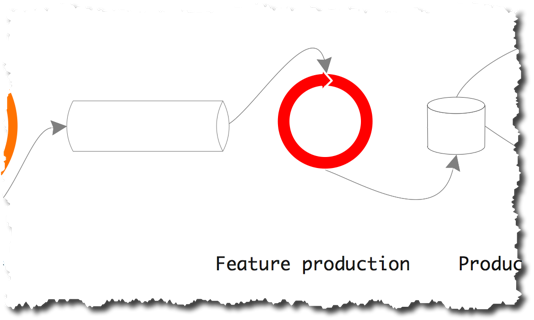

Feature production

The fundamental responsibility of software production is, well, code production. It’s about converting User Stories into implemented features. This should be done at a steady pace. Progress should be continuous.

The result of this is a flow of features as the output of programming. Yes, I mean a continuous flow. It should not be paced, there should be no cadence. Feature after feature after feature should flow from programming into a reservoir.

These features should be as close to done as possible, which includes feedback from the Product Owner.

Maybe sometimes this flow is stronger or faster, maybe sometimes it is weaker or slower. But it’s a constant flow.

And I’d even say, it should take a User Story only 16 working hours at max to get transformed into a done feature flowing out into the reservoir. 16 working hours is two eight-hour days, i.e. the time from 9AM today until 5PM tomorrow. That’s a time frame you can pretty much control; if need be you put a „Do not disturb!“ sign on your door or you do a day of home office or add some overtime. You start today and finish tomorrow and get feedback. It’s a kind of reliability horizon.

User Stories are picked from a never ending queue. How the queue is filled or why it’s ordered as it is, is not the concern of feature production. Feature production blindly dequeues whatever is waiting when there is capacity to work on a User Story. Of course this is only done within its WIP limit.

This results in a flow of features which can be steered in any direction at any time as need be by changing the content of the User Story queue. A change of a project’s course is possible whenever work on a User Story has ended.

Work in progress is never interrupted, canceled, or shelved before it’s done, though. This is to avoid waste.
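The pull mechanics described above can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the class name `FeatureProduction`, its method names, and the story names are all hypothetical, and a real team would of course track far more than a list of strings.

```python
from collections import deque


class FeatureProduction:
    """Pulls User Stories from a queue, never exceeding a WIP limit.

    Hypothetical sketch; names and structure are illustrative only.
    """

    def __init__(self, wip_limit):
        self.wip_limit = wip_limit
        self.queue = deque()    # head = currently most important story
        self.in_progress = []   # stories being worked on right now
        self.reservoir = []     # finished features

    def pull(self):
        """Start the story at the head of the queue if there is capacity."""
        if self.queue and len(self.in_progress) < self.wip_limit:
            story = self.queue.popleft()
            self.in_progress.append(story)
            return story
        return None

    def finish(self, story):
        """Move a story from work in progress into the reservoir."""
        self.in_progress.remove(story)
        self.reservoir.append(story)

    def reprioritize(self, stories):
        """Replace the queue content; work in progress stays untouched."""
        self.queue = deque(stories)


production = FeatureProduction(wip_limit=2)
production.reprioritize(["login", "search", "export"])
production.pull()                               # starts "login"
production.pull()                               # starts "search"
blocked = production.pull()                     # WIP limit reached, nothing starts
production.reprioritize(["billing", "export"])  # course change while work is ongoing
production.finish("login")
next_story = production.pull()                  # picks up the new head of the queue
```

Note that `reprioritize` only touches the queue. Whatever has already been pulled runs to completion, and the change of course takes effect the next time capacity frees up.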

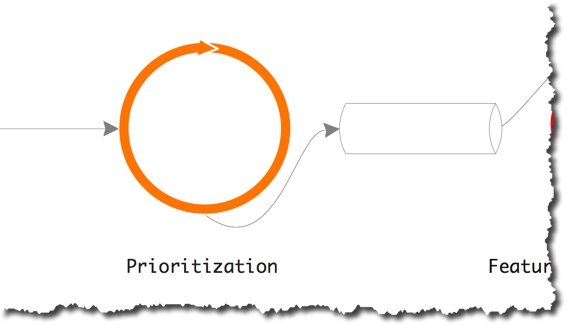

User Story prioritization

It’s the Product Owner’s responsibility to keep the feature production queue constantly filled. Whether she does that on a daily or weekly basis is up to her.

To me it seems natural as well as inevitable to be relaxed about this. To have a weekly meeting with developers and testers to talk through User Story candidates for the production queue seems like a good idea. It makes coordination of such synchronous communication easier.

Whereas feature production works with an iteration length of 4 to 16 hours the regular iteration length for user story prioritization is different, e.g. 7 days.

Except for when that’s too long because some issue has sprung up unexpectedly. Then the Product Owner changes the order or even content of the feature production queue at any time before her next iteration starts. And this does not „break“ an iteration. It’s just a perfectly natural reaction to changes in the environment. All else would be following a plan over responding to change, i.e. not living up to Agility’s core values.

I don’t call the feature production queue a backlog because that term to me sounds like „lagging behind“. It’s subtly demotivating. It’s just a queue, i.e. an ordered list of User Stories with the currently most important one being the head.

What the criteria are for a User Story to enter the queue or to be put in a certain place therein is a matter of its own. That’s not important at the moment. Keeping the feature production queue filled is a black box like transforming User Stories into coded features. The important point is: both are decoupled by the queue.

Feedback

Feedback is of greatest importance for software development to avoid waste and progress quickly.

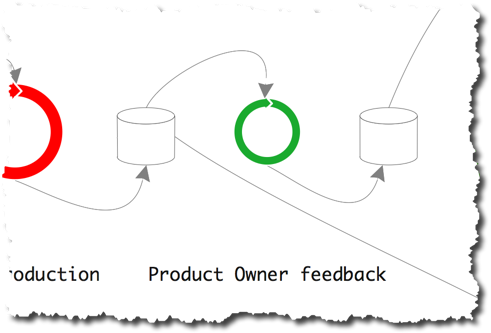

Product Owner feedback

First developers and testers give feedback to the Product Owner in prioritization meetings. They all have to agree on a User Story’s readiness for the feature production queue.

Then the Product Owner gives feedback to developers and testers about a feature implementation. This should be done as immediately as possible after a feature has been finished. Preferably even before a new User Story is picked from the feature production queue.

It’s the first acceptance hurdle a feature has to take. And since the Product Owner is in constant touch with developers and testers anyway – that’s part of her job description – this should not be difficult to accomplish.

The Product Owner should set aside some time each day for such „acceptance tests,“ since each day programming will finish some feature.

This is the smallest human feedback cycle. Iteration length 1 day. And it’s taking input from a small reservoir of finished features filled by programming.

Stakeholder feedback

More feedback is needed, though. A Product Owner is not a user, not an IT admin, not the customer, not management. She’s only a proxy for those who actually want the software or will be using the software.

To be really sure software production is going in the right direction feedback from beyond the team has to be compiled. To gather such a motley crew at the same time in the same place is not easy, however. So this cannot happen at too high a frequency.

Maybe every week is ok, maybe every two weeks or three weeks. In any case there is no need whatsoever to couple the iteration for stakeholder feedback to other iterations! Let prioritization meetings happen every week and stakeholder feedback meetings every two. Why not?

Whoever gives feedback does so on whatever is in the reservoir constantly being filled by programming after the Product Owner gave her OK.

To synchronize the feedback cycles with any other iteration length to me seems artificial at best, and possibly harmful.

Delivery

Features approved by Product Owner and stakeholders flow into a reservoir. They are ready to be unleashed onto a wider audience.

Delivery/deployment ladles from this reservoir whatever and whenever they see fit. Maybe sales needs to scoop a new release for some key account, maybe marketing wants to prepare a trade show, maybe enough features are ready to let beta testers take a ride.

Whatever programming outputs is formally delivery ready. It just needs to pass the feedback gates of Product Owner and stakeholders. Once it has passed them it’s filling up the delivery reservoir.

The frequency with which features are released from there should be decoupled from any other iteration. Essentially this need not even be periodic. Releases can be bundled up at any time, maybe every quarter, maybe unexpectedly next week to get a bug fix to all users.

Delivery thus should not be of any concern to programming. The Product Owner, though, will take delivery into account during preparation of User Stories and prioritization.

Forecasting

Why in all the world should forecasting be done based on any iteration? Has anyone ever forecast the weather for the next three days by looking at the weather of the past three days? Of course not. Has anyone ever predicted stock market development of the next three days based on the stock market of the past three days? Of course not.

Forecasts for any period always take into account an arbitrarily long period in the past. You want to look three days into the future? Check the data for yesterday, the past three days, the past week, the past month… whatever.

Forecasting does not need any cycle length. Do it whenever you feel a need for it. Use a throughput metric you like. Right here I don’t want to argue about Story Points or whatever. Maybe you just count the number of features flowing out from programming or from Product Owner acceptance or from stakeholder acceptance.

Look at any stretch of time into the past. For example there could have been 10 features programmed the past week, or 18 the past two weeks, or 31 the past three weeks, or 37 the past four weeks, or 56 the past five weeks. What’s the right number to use to forecast what the team will accomplish the next week or month? Pick a sampling period as you like. And do that any time you like.
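To make the choice of sampling period tangible, here is a small Python sketch using the figures from the paragraph above. The linear extrapolation (average weekly rate times number of weeks ahead) is my own simplifying assumption for illustration, not a recommended forecasting method, and the function names are hypothetical.

```python
# Features finished, keyed by look-back window in weeks
# (the example figures from the text above).
finished = {1: 10, 2: 18, 3: 31, 4: 37, 5: 56}


def weekly_rate(weeks_back):
    """Average number of features per week over the chosen look-back window."""
    return finished[weeks_back] / weeks_back


def forecast(weeks_back, weeks_ahead):
    """Naive linear extrapolation (an assumption made for this sketch)."""
    return weekly_rate(weeks_back) * weeks_ahead


for weeks_back in finished:
    rate = weekly_rate(weeks_back)
    print(f"look back {weeks_back} week(s): {rate:.2f} features/week, "
          f"about {forecast(weeks_back, 4):.0f} in the next 4 weeks")
```

Depending on the window, the average rate lies anywhere between 9.0 and 11.2 features per week. The forecast depends on how far back you choose to look, and that choice has nothing to do with any iteration length.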

That said let me add: I’m very skeptical about the value of such forecasts. You simply cannot know when a feature will be ready for delivery – until it’s ready. That’s due to the nature of software development, which is not like what a baker or plumber does; only 20% of software development is craftsman’s work. 80% is engineering and research, the duration of which you cannot estimate.

Usually forecasting/estimation understands software development as shooting a cannon ball. But firing off User Stories does not cause them to fly along a ballistic curve. You cannot aim for a fixed target.

Rather view software development as a baseball game. In order to catch a ball a fielder needs to constantly observe the ball and adjust his course. The line he runs from where he stands to where he catches the ball is not straight. A straight line would be possible if he could quite precisely estimate where the ball would hit the ground. But he cannot. He only sees it flying in some rough direction and then starts running in that rough direction while constantly adapting. The fielder runs along a curved line towards where he eventually will catch the ball.

You should do the same with software production and marketing or sales. Don’t give them an exact date when a feature set will be ready in the post stakeholder feedback reservoir. Instead constantly tell them about your progress towards this goal. Tell them when the feature flow is high, when it’s slowing down, when it’s picking up again. Maybe you want to inform them periodically every week or two, maybe you do it irregularly whenever there are marked changes in flow.

Just don’t tie these notifications to any other cycle. They need to be independent to be able to stay reactive.

Reflection

Finally it’s prudent to halt periodically and reflect upon what the team did. What went well? What went wrong? How did we work together? Were there any impediments slowing progress, e.g. dependencies, policies, surprise situations? What can be done better in the future?

Those are questions to mull over during a Retrospective, as Scrum calls such reflection meetings.

Periodic reflections help build the collective consciousness of the team. As a self-organizing social system a team needs to watch itself, not just software as its product.

Essentially reflection meetings are the heart of team leadership. It’s where decisions are made, even ones to put decision making power in certain hands.

How often such reflection meetings should be called is a matter of team maturity and project context. Some teams should meet every week, others just every month. Or maybe sometimes an additional meeting is required to cope with an exceptional issue.

In any case there is no need to tie reflection meetings to any other iteration. Feature production is too fast, anyway. And there is no specific relationship between prioritization or feedback and reflection to warrant synchronization.

Orthogonal streams

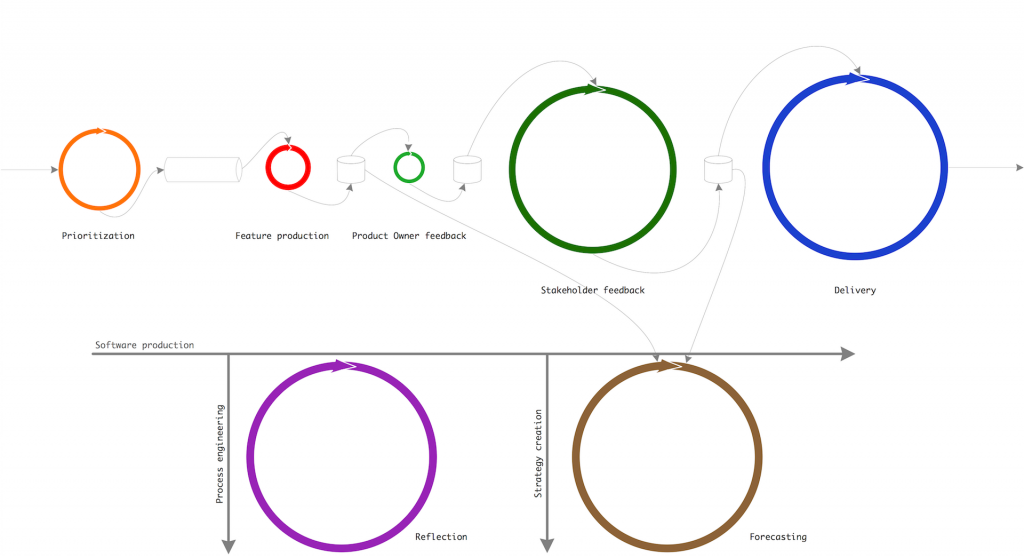

Putting all these singled out responsibilities back together results in a multi-dimensional production process:

There is a main production stream. Its product is high quality software. It spans from User Story prioritization to delivery. That’s the process software development is about. The flow through this process has to be smooth at all times to constantly produce value.

But then there are two additional orthogonal streams. One produces the production stream itself. That’s what reflection is about, or more generally: that’s what leadership is about in the first place. Leadership’s first responsibility is to create collaboration towards a goal.

The other produces strategic decisions which steer the collaborative production process. That’s also a responsibility of leadership: to create future viability. Here’s where forecasting has its place.

Iterative Software Development with Multiple Cycles

Software development happens in reaction to a complex world. To be able to deal with such a world, software development needs to match the world’s complexity.

In my view the usual „architecture“ of the agile software production process is trying to simplify too much. Relying on just one type of iteration in most cases seems to reduce the internal complexity too much. It’s making software production rigid.

Synchronizing the iteration length of several responsibilities might work in some situations, e.g. in small to medium green field projects. But overall that’s just a special case.

In a previous article I already described different iteration lengths nested within each other to gather feedback. But we have to do more. We have to decouple those loops.

As long as we don’t recognize the different responsibilities on their own which need to come together to fluently produce high quality software, we’ll have a hard time implementing them.

And once we differentiate between them, we need to put them together in a beneficial manner for our particular context. Each responsibility requires specific consideration and needs to be tweaked individually.

Maybe it’s ok to start with the Scrum iteration monolith - but always view the responsibilities as fundamentally independent building blocks.

I don’t like monolithic code bases, and I don’t like monolithic processes. To me the above picture somewhat looks like a micro-service architecture for software production: each responsibility happily plodding along on its own, each one transforming some input into some output, but all decoupled by shared resources (queue, reservoir).
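To stay with the software analogy, the decoupling can be sketched in Python: each responsibility is a small function that only knows its input and output buffer. All names here are hypothetical, and as a simplification I assume every review accepts every feature it looks at, which real reviews certainly won’t.

```python
from collections import deque

# Shared buffers that decouple the stages (names are illustrative).
story_queue = deque(["login", "search", "export", "billing"])
finished = deque()        # output of programming
po_approved = deque()     # output of Product Owner feedback
delivery_ready = deque()  # output of stakeholder feedback


def move(source, target, limit=None):
    """Move up to `limit` items (all of them if None) from source to target."""
    count = len(source) if limit is None else min(limit, len(source))
    moved = [source.popleft() for _ in range(count)]
    target.extend(moved)
    return moved


def program():
    """Continuous: turns one User Story into a finished feature."""
    return move(story_queue, finished, limit=1)


def product_owner_review():
    """E.g. daily: accepts whatever programming has finished so far."""
    return move(finished, po_approved)


def stakeholder_review():
    """E.g. every two weeks: accepts whatever the Product Owner approved."""
    return move(po_approved, delivery_ready)


def ladle_release():
    """Whenever delivery sees fit: scoops everything that is delivery ready."""
    return move(delivery_ready, deque())


# Each stage is called on its own schedule; none knows about the others.
program()
program()
product_owner_review()
program()
stakeholder_review()
release = ladle_release()
```

In this run the release contains the first two features, while the third is still waiting for Product Owner feedback. No stage had to wait for, or even know about, the rhythm of any other.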

This is not to say Scrum is bad. If you get along well with Scrum’s „one size fits all“ Sprint, all’s fine. Rather what I want to say is: Scrum or „single iteration thinking“ might not be the end, but just the start. A special case which works in some contexts, but which we have to abstract from. Maybe it’s time to refactor your production process to deeper insight, to borrow a phrase from Domain Driven Design.