Agile Dimensions of Feedback

Feedback is at the core of agile projects; to a great extent it's what Agility revolves around. Only feedback tells you whether you're investing your time into quality or waste. And that's what Agility is about: constantly delivering quality in an ever-shifting world.

The importance of feedback warrants a closer look. There is more than one dimension to it.

Frequency

What Agility most obviously did with feedback was to crank up its frequency. Instead of a development team getting formal feedback every couple of months after reaching a milestone, now feedback was to be given every couple of weeks. Agility proposes to work iteratively, with an iteration lasting 1 to 4 weeks. In Scrum that's called a sprint.

To avoid cargo cult in that matter, though, it's important to understand what determines the feedback frequency. Why (and when) is a feedback cycle of 2 weeks better than one of 6 months?

Frequency is inversely proportional to certainty. If it is very certain that what is being done is of high quality, then feedback frequency can be low; if, on the other hand, it's very uncertain whether what's in the making will be deemed of high quality, feedback frequency should be high.

Unfortunately, uncertainty is high in all non-standardized environments. Industrialized production may be the poster child of certainty. In software development, on the other hand, uncertainty is very high.

- Does the customer really know what is needed to solve a problem?

- How fast does the need change?

- Does the customer clearly and losslessly express what's currently needed?

- Does software development truly understand what has been expressed by the customer?

- Does software development flawlessly implement what has been understood?

And not only are these all aspects of uncertainty; even the level of uncertainty is itself uncertain. We are prone to err on the side of certainty: we like to believe things are more certain than they are. The reasons for that range from personal to organizational, I'd say.

How high, then, should the feedback frequency be? I don't know for sure. But in case of doubt I'd say: even higher ;-) Or to paraphrase a proverb: If you think the frequency is too high already, then increase it even more.

Of course, feedback frequency has to be balanced with other things like undisturbed focus to produce or availability of relevant feedback givers.

Anyway: don't treat any frequency recommendation as dogma. 1 day, 1 week, 1 month... it all depends on the level of requirements certainty. Just keep in mind: uncertainty probably is much higher than you would like it to be.

Distance

The ultimately relevant feedback only comes from actual users of a product. So you might think: "If so much is uncertain about requirements, I'll ask the user every day for feedback." That's a great idea, but unfortunately it's mostly infeasible. Users simply cannot (or don't want to) give feedback every day.

People who are relevant for assessing the quality of your work (stakeholders) are at different distances. They cannot all be reached equally easily for feedback. Maybe they actually live at a distance, maybe there are too many to ask them all frequently, maybe they are too busy with other things... It's just reality.

That's why in Scrum there is a product owner. The product owner is an empowered and highly motivated envoy of all stakeholders. He/she is embedded in the production team to give high frequency feedback. And I suggest you set the feedback period to 1 day: work started today is approved by the product owner by the end of tomorrow's working day at the latest.

Of course, work to be done until tomorrow still has to be an increment: something a stakeholder representative, i.e. an essentially non-technical person, can give feedback on at all. In Agility progress is made and measured only in such increments.

How to break down requirements into such small increments is an art in and of itself. It highly depends on the thing to produce. For software my idea on this is pretty clear; more about that in another article.

But still, the product owner is not the user. Although the product owner tries to "get into the user's head" and know as exactly as possible what the user's needs are... there is uncertainty in the product owner's understanding and judgement. That's why the product owner is never enough as a source of feedback.

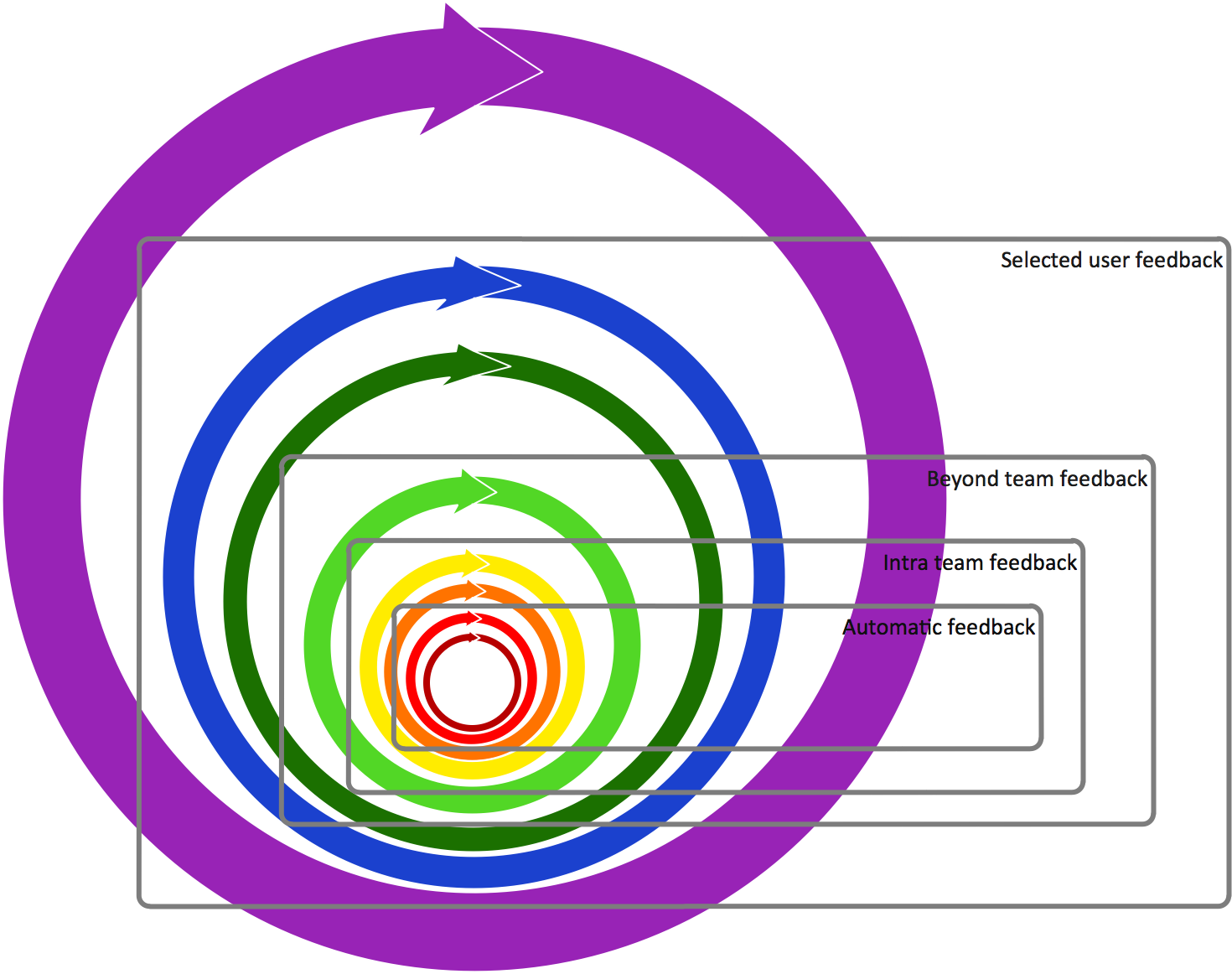

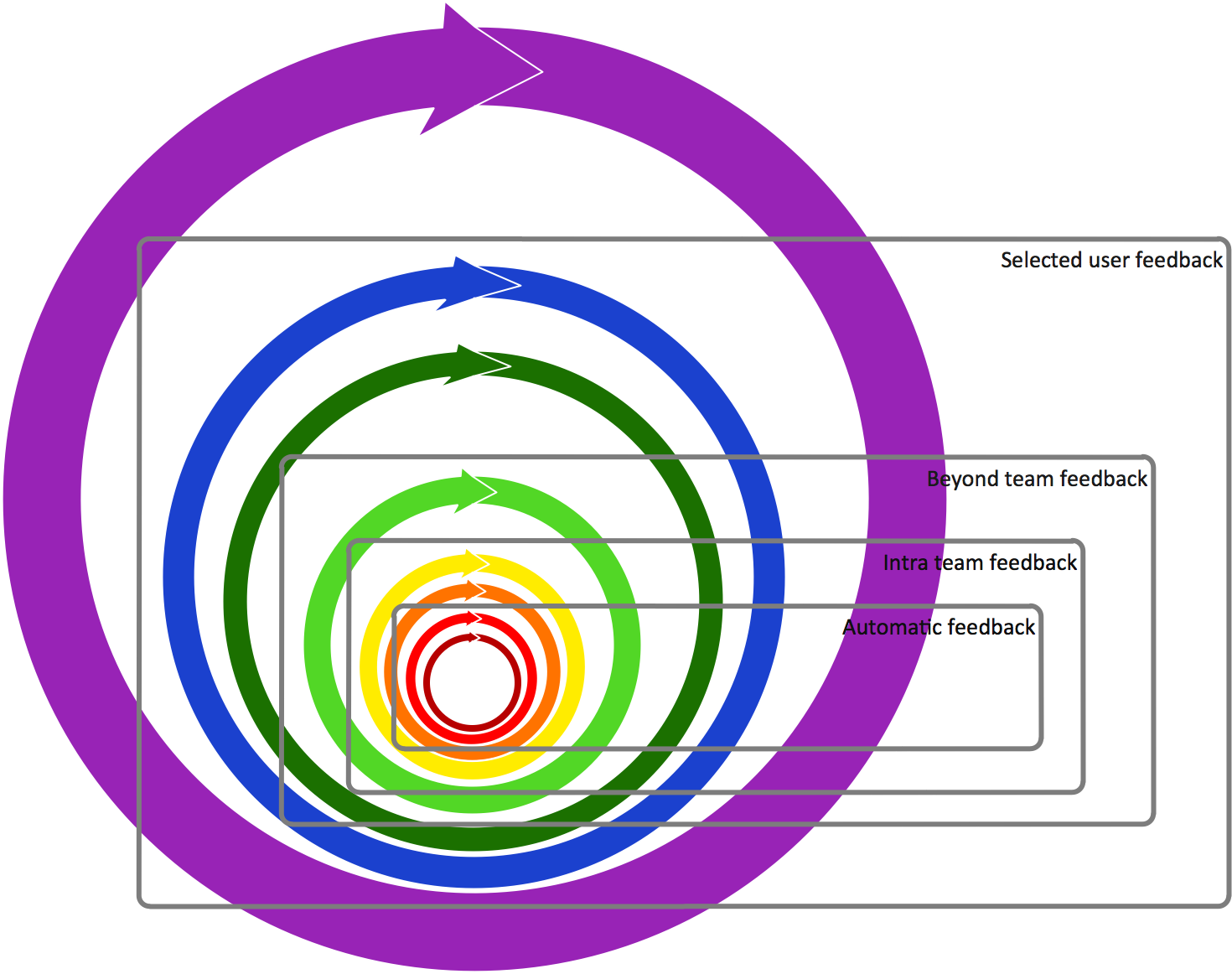

This naturally leads to nested feedback loops. There are at least two, one for product owner feedback and another one for user feedback. But why stop there? Why not introduce more levels of feedback loops depending on the distance of feedback sources?

This of course is not new. Such nested loops have been in place one way or the other for decades. Nevertheless here are the ones I deem most important:

- Implementor himself checks against his understanding, possibly codified in automatic tests. Frequency (f) = minutes

- Continuous integration infrastructure checks against formalized criteria codified in automatic tests. f = minutes to hours

- Human testers possibly do further checks against formalized criteria. f = hours

- Product owner checks against his non-formalized expectations. f = day

- (Internal) stakeholders review larger chunks of increments. f = weeks

- Chunks of increments get pre-released to Alpha testers. The work results now actually get used in practice by different stakeholders. f = weeks

- Chunks of increments get pre-released to Beta testers. f = weeks

- Chunks of increments get released to the rest of the user community. f = weeks to months
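The loops listed above can also be written down as plain data, which makes the pattern "the farther away the source, the longer the period" easy to check. What follows is only a minimal sketch: the `FeedbackLoop` type, its field names, and the concrete hour values are illustrative assumptions picked to match the rough orders of magnitude in the list, not measurements or recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedbackLoop:
    source: str          # who or what gives the feedback
    period_hours: float  # assumed typical time between two rounds of feedback


# Ordered from the nearest to the farthest feedback source.
# All hour values are illustrative assumptions.
LOOPS = [
    FeedbackLoop("implementor", 0.25),
    FeedbackLoop("continuous integration", 1),
    FeedbackLoop("human testers", 4),
    FeedbackLoop("product owner", 24),
    FeedbackLoop("internal stakeholders", 24 * 7 * 2),
    FeedbackLoop("alpha testers", 24 * 7 * 2),
    FeedbackLoop("beta testers", 24 * 7 * 3),
    FeedbackLoop("user community", 24 * 7 * 8),
]


def periods_grow_with_distance(loops):
    """Return True if no loop is faster than a loop closer to the team."""
    return all(a.period_hours <= b.period_hours
               for a, b in zip(loops, loops[1:]))


if __name__ == "__main__":
    for loop in LOOPS:
        print(f"{loop.source:<25} every {loop.period_hours:g} h")
    print("periods grow with distance:", periods_grow_with_distance(LOOPS))
```

A team could fill in its own numbers and see at a glance whether a distant loop is being asked for feedback more often than a near one.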

As you can see, I, at least, don't think you need to release to the user community within days or even every other week. You can, and maybe you should if at all possible, but you don't need to in order to be working in an Agile manner.

Agility is not about a particular frequency of feedback, but about the right frequency with regard to the uncertainty of the requirements and the fluctuation in the influences from the environment.

But uncertainty is not the only factor to determine frequency. Another one is the distance from a feedback source. And since that can be large, it's prudent not to try to jump from product owner to user community, but to move there in a stepwise manner. At least that's what's feasible for most software endeavors I see during my consulting/training gigs.

There is simply no way to roll out releases to, for example, 20000 users distributed across 6000 companies every other day. Even though it might be technically possible, users don't want to be disrupted that often by changes to their tool.

Instead feedback has to be gathered in decreasing frequency from increasing distance:

The nested feedback loops can be grouped. Between those groups important differences exist. The most important ones to me are:

- Feedback can be automatically or manually gathered.

- Feedback can be from within the production team or from outside.

- Feedback can come from lay users or from technically savvy people.

Origin

Regardless of the distance from which feedback arrives and with which frequency it is given, there are two different origins for the interest in it.

A team can push increments in the face of feedback givers – or feedback givers can pull increments from the team. The difference is in the energy and motivation behind feedback.

When pulling, the origin of interest is the feedback giver. When pushing, the origin of interest is the team.

In my view feedback given as a result of pulling is much, much stronger. A feedback giver – from product owner to end-user – constantly pulling on a team makes it very, very clear how important the team's work is. She also clearly states when she next wants to see results and give feedback. It's this appointment which then exerts power. It's like a vacuum pulling in a team's resources. An empty bowl begging to be filled.

Think of a home owner who gets his house built. To me he's the prototype of an interested feedback giver pulling on a team. Every single day a home owner will be on site and check quality and progress. This is clear to everyone on the team. That's a powerful force to focus.

The opposite is a feedback giver who's not really interested in helping a team progress and not waste resources. Such a feedback giver will not make (m)any appointments for feedback himself. Mostly he has other, more important stuff to do. Checking on the production process is a burden to him. Why can't the team just deliver? Hasn't everything that's required been stated sufficiently? Anyway, aren't the team members the experts who should know what's required?

To me, the manager or user who's supposed to also act as some kind of product owner is prototypical of this. In order to get feedback, the team has to push the feedback giver for an appointment to give feedback. Again and again. Out of sheer necessity, the team is the origin of interest in feedback.

Unfortunately the origin of interest in most projects I see is... the team. This makes it very hard to progress without much waste. Also the overall energy is low and comparatively unfocused. Distractions (e.g. bug fixes, meetings) abound.

On the other hand, whenever there is an obviously interested feedback giver who's constantly pulling on the team, all forces align in her direction. Much more progress is made, less waste is produced, motivation is higher.

Mode

Once an increment is in front of a feedback giver, there are two modes in which he can deal with it: use it or watch a presentation.

Needless to say, a presentation is the low energy mode. If work is just presented feedback givers can lean back – and doze off. They don't really need to get involved. That's why I don't like the term "review" as in "sprint review meeting". It suggests a presentation, it opens the door for energy to flow out, while seemingly doing the right thing: getting feedback.

Also if results get presented the presenter can manipulate the experience of the feedback giver. Simply don't show or mention missing features, maybe the feedback giver won't realize it.

This is different when a feedback giver is not just watching, but actually using the results. He is then more unpredictable, which again exerts a pull on the team. He's also more involved and more interested if he invests time and brain power in actually using them. That's high energy.

Value

Agile teams constantly produce increments, that is, changes some stakeholder can give feedback on. But how big should those changes be? Agile literature talks about value here. They should be valuable or usable, make a difference in practice.

Surely that's the ideal. But in my view this limits the frequency for feedback. Producing something of real value is much harder than producing something on which a stakeholder can give feedback.

I suggest thinking like this: feedback over value. Or information over value.

Because in situations of uncertainty, creating information is of value in itself, regardless of whether a user gets something in his/her hand to use on the job.

To illustrate this think of a simple requirement: a login dialog.

When starting the software, the user should log in to get authenticated and authorized. This is how the dialog should look:

When starting the software, the login dialog appears filled with the user name used on the previous successful login. The OK-button is disabled until both user name and password have been entered. If the user cannot be authenticated a message will be displayed; the user can try to log in again. If authentication is successful the main dialog is shown with all options disabled for which the user has no permission.

If the "Keep me signed in" check box had been enabled upon previous login no dialog appears. The user's name and permissions are then read from a cache. Only after the cache expires (default: 2 weeks) an explicit login is required again.
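Two of the rules in this description are small enough to be stated as pure functions, independent of any dialog. The sketch below is only an illustration under stated assumptions: the function names, treating a whitespace-only user name as "not entered", and counting the cache as expired exactly at the two-week mark are my assumptions, not part of the requirement text.

```python
from datetime import datetime, timedelta
from typing import Optional

# Default cache lifetime taken from the requirement text (2 weeks).
CACHE_LIFETIME = timedelta(weeks=2)


def ok_enabled(user_name: str, password: str) -> bool:
    """The OK button is enabled only if both fields have been filled in.

    Assumption: a user name consisting only of whitespace counts as empty.
    """
    return bool(user_name.strip()) and bool(password)


def needs_explicit_login(keep_signed_in: bool,
                         cached_at: Optional[datetime],
                         now: datetime,
                         lifetime: timedelta = CACHE_LIFETIME) -> bool:
    """Decide whether the login dialog has to be shown at startup.

    Assumption: the cache counts as expired once its age reaches `lifetime`.
    """
    if not keep_signed_in or cached_at is None:
        return True
    return now - cached_at >= lifetime


if __name__ == "__main__":
    start = datetime(2024, 1, 1)
    print(ok_enabled("alice", ""))                                        # False
    print(needs_explicit_login(True, start, start + timedelta(days=3)))   # False
    print(needs_explicit_login(True, start, start + timedelta(days=14)))  # True
```

Rules isolated like this can be checked by the implementor within minutes, long before a dialog exists.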

How would you decompose these requirements into increments? How many stories would you write and put on a Scrum board?

Here's a feature tree I'd come up with:

Of course any user would want all those features to be implemented at once. And why give feedback before that's been done? Only the whole login dialog would be considered valuable – not just part of it.

But then, if hard pressed, a user maybe would concede to give feedback for just the branch of the "Press OK" interaction without the other interactions ("Open" and "Enable OK"). That's why "Press OK" is colored green.

Yellow increments are those which a user might still find pretty tangible if implemented. There could be a login dialog which only authenticates, but does not authorize a user yet; instead all users would be logged in with some default role.

Finally orange increments don't provide any perceived value and are hardly tangible for a user.

Bottom line: Green provides clear value and could be released on its own. Yellow adds less value and could not be released on its own. Orange provides even less value.

All features are of course valuable – but some have more value than others. There is a value hierarchy.

The question now for any development team is: When to ask for feedback? Implement everything because that's what users want anyway in the end? My recommendation is: No, pick any, yes, any feature that's important and small, so you can get feedback quickly.

Examples:

- Just implement the "Enable OK" feature.

- Just implement "Show previous name" upon "Open" (with a never expiring cache).

- Just implement "Authorize" without (!) the dialog using a test bed.

- Just implement "Open" and "Show main dialog" with a fixed permission set to show how a user can navigate through the program.

My point is: All increments have some value. But that does not determine any scope that needs to be implemented before getting feedback. Rather, look at the frequency of a feedback cycle and choose increments on any level which fit it. If that means you need to build a special test bed, so be it.

If you start with low value increments, over time you'll build high value for the user. Until then, though, you'll have gathered important feedback on user expectations and/or technologies.

A Checklist for Giving Feedback in Agile Projects

What does all that mean for the feedback to seek/give?

- Feedback should be given in a frequency matching the uncertainty of requirements and environment.

- Feedback should be given on several levels in different frequencies.

- The interest in giving feedback must be with the feedback givers; they need to pull on the team.

- Feedback should be given as a reaction to actually exercising/using the system as a feedback giver.

- Feedback givers need to give feedback on increments, but don't let them insist on high value increments all the time.